Not long ago, we celebrated the World Wide Web’s 30th birthday. Its creator, Tim Berners-Lee, said his brainchild is still in its awkward teenage phase. Looking at the problematic personality of the internet in all its glory (the datafication, centralization, and balkanization), no one would dare to argue with him.

Looking beyond the “quirks”, the story does not get much better. The Snowden case shows with impeccable precision how vulnerable the internet can actually be.

To move beyond an internet that transmits data as classical bits (a stream of electrical or optical pulses representing 1s and 0s), scientists at QuTech are working on an unhackable quantum internet that could move particles in a quantum state. Such a particle could represent a combination of 1 and 0 simultaneously.

Moving classical bits across networks took off with one of the internet’s key predecessors: packet switching. Packet switching breaks data into suitably sized pieces, or packets, that network devices can transfer quickly and efficiently. This made it possible to send a file from one computer to another even over links with very limited data transmission capacity.
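To make the idea of breaking data into pieces concrete, here is a minimal Python sketch of splitting a message into packets and putting it back together on arrival. The `Packet` structure, the 8-byte packet size, and the function names are illustrative assumptions, not the format of any historical network; real protocols also add addressing, checksums, and retransmission of lost packets.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this piece in the original message
    total: int      # how many packets make up the whole message
    payload: bytes  # the chunk of data this packet carries


def packetize(message: bytes, size: int) -> list[Packet]:
    """Break a message into fixed-size packets tagged with sequence numbers."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message regardless of the order the packets arrived in."""
    if not packets:
        return b""
    total = packets[0].total
    received = {p.seq: p.payload for p in packets}
    if len(received) != total:
        raise ValueError("missing packets; cannot reassemble yet")
    return b"".join(received[seq] for seq in range(total))


if __name__ == "__main__":
    original = b"Packets may take different routes through the network."
    packets = packetize(original, size=8)
    random.shuffle(packets)  # simulate packets arriving out of order via different routes
    assert reassemble(packets) == original
    print(f"{len(packets)} packets reassembled correctly")
```

Because every packet carries its own sequence number, the receiver does not care which route each piece took or in what order it arrived; it only needs all of them before rebuilding the message.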

How Easy-to-Use Software Came to Be

In her book “Inventing the Internet”, Janet Abbate describes how, in the 1960s, two computer researchers, one in the US and one in the UK, were working on packet switching at the same time in very different contexts.

Paul Baran, a scientist at the Rand Corporation in the US, proposed a network in which computers would divide users’ messages into packets and reassemble incoming packets into messages. The innovation emerged in the context of the Cold War.

The Rand Corporation was dedicated to research on military strategy and technology. Both the US and the USSR were building nuclear ballistic missile systems, and because communication depended on central hubs, a single missile could wipe out an entire communication network. The US military needed a system that would survive such an attack. A packet-switching network spread across many stations, able to route messages through whichever stations and nodes remained available, would be just that.

Cold War Problem Solving

Thus, Baran’s aim was to create a system that would survive under hostile conditions, and it had many elements specifically adapted to Cold War threats: very high levels of redundancy, nodes located away from population centers, integrated cryptographic capabilities, and priority/precedence features. It was a communication system that could endure a war.

The computer scientist in the UK, Donald W. Davies, had completely different problems to solve. While the Americans were troubled by the science gap between them and the USSR, the British were worried about the technology gap between them and the US. The UK was suffering from what has been called a “brain drain”. The country was in an economic malaise and falling behind in exploiting new technologies.

Harold Wilson, the Prime Minister of the United Kingdom, aimed to solve this problem with a new economic and technological regime. He moved resources away from the unproductive defense industry and from other areas such as aerospace and nuclear energy, and redirected the freed-up resources to commercial applications.

Technological Shifts

The field that gained the most attention and funding from this shift was computing, and Davies was one of the scientists working to build up the British computer industry.

Since the focus in the UK was not on winning a war but on economic growth, what was expected of a computer scientist was entirely different from what was expected in the US. Instead of concentrating on building a communications system with a high rate of survivability, Davies made interactive computing his priority.

Computers in the early 60s were big and expensive, yet slow, tedious, and difficult for users to operate. Time sharing had solved some of the processing issues that made computers slow, but inadequate data communications remained a problem.

With his background in computing, Davies saw a solution in packet switching. However, he did not try to build a network that would survive anything, as Baran did. He tried to build a network that would provide access to scarce resources and make interactive computing affordable.

Davies designed the network for business people, since he saw how that could help boost the UK economy. Through the early version of the network, users could access computers for writing and running programs, but also for services and applications such as “desk calculator”, “communication between people”, and “scrapbook”.

Flexibility/Usability Tradeoff

However, most, if not all, computer users in the 60s were scientists who had the skills and knowledge to work with computers and networks that were not particularly user-friendly. Getting things done took effort. For a novice business user, a system that was both difficult to learn and difficult to operate made little sense.

Davies tried to solve this by making the system easier to use, but at the cost of some flexibility and adaptability. As Janet Abbate writes in her book: “For example, they implemented parts of the user interface in hardware. A user wishing to set up a connection would punch a button marked TRANSMIT on the front of the terminal, after which a light labeled SEND would light up to indicate that the network was ready to accept data; there were other lights and buttons for different operations. This interface was easy for novices to learn, but it was harder to automate or modify than a procedure implemented in software would have been.”

That may have been one of the first prominent cases of the flexibility-usability trade-off in the history of computing: as the flexibility of a system increases, its usability decreases.

Can User-Centric Software Also Be Flexible?

Today, user-centric design is one of the highest goals for both services and products. Since perceived ease of use and perceived usefulness can predict the intention to use software, and ultimately its actual usage, designing for the actual user clearly has its benefits.

Clearly, the more straightforward the software, the easier it is to get settled. Take Twitter. It’s a social networking site for sharing news and ideas about what is happening, when it’s happening. To join in on a conversation, all you need to do is create an account.

However, when software offers you more than one option, it’s bound to get trickier. Take TweetDeck, a Twitter tool built to help marketers do their jobs more easily. You can join in on any Twitter conversation there too, but there is so much more that you can set up. You can schedule tweets, set up boards, follow hashtags and keywords, and see what people are saying about your brand. To get to a system that works for you, you have to put some effort in.

Flexible Project Resource Management Software

With more flexible software, the setup process can take more effort. That does not mean the work itself will be harder. Once it is set up, software whose flexibility seemed complex at first can make the everyday work easier than simpler software would.

It’s the same with resource planning software. It might seem easier to schedule resources in a plain Excel spreadsheet, where all you need to do is paste in your resources and start merging and coloring cells. However, when you are not keeping track of all the additional information about your resources, tasks, and projects, the planning process itself can become a lot more tedious.

Let’s say each project manager in your organization uses their own spreadsheet system, and a general resource manager makes sure that resources are optimally utilized. Because spreadsheets cannot be easily updated, changes are reflected late. A team member cannot just log in and change a task’s status once a delay has happened, so another manager might book them for a new task in the meantime.

Now the resource manager has to solve a resource conflict that could easily have been prevented with resource management software. They have to go over the problem with both managers and the team member, then either delay the plans or allocate additional resources. That’s why easy to set up does not always mean easy to use.
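The kind of check that a shared planning tool can run automatically, and separate spreadsheets cannot, fits in a few lines of Python. This is a minimal illustration under our own assumptions: the `Booking` fields, the inclusive day-based date ranges, and the names Alex, Sam, Manager A, and Manager B are hypothetical and do not reflect Ganttic’s actual data model or API.

```python
from dataclasses import dataclass
from datetime import date
from itertools import combinations


@dataclass(frozen=True)
class Booking:
    resource: str  # who or what is booked (hypothetical field)
    task: str      # the task the resource is booked for
    manager: str   # the project manager who made the booking
    start: date    # first day of the booking (inclusive)
    end: date      # last day of the booking (inclusive)


def overlaps(a: Booking, b: Booking) -> bool:
    """Two inclusive date ranges overlap if each starts no later than the other ends."""
    return a.start <= b.end and b.start <= a.end


def find_conflicts(bookings: list[Booking]) -> list[tuple[Booking, Booking]]:
    """Return every pair of bookings that double-books the same resource."""
    return [
        (a, b)
        for a, b in combinations(bookings, 2)
        if a.resource == b.resource and overlaps(a, b)
    ]


if __name__ == "__main__":
    shared_schedule = [
        # The original task slipped, so its end date was pushed back...
        Booking("Alex", "Website redesign", "Manager A", date(2024, 5, 6), date(2024, 5, 17)),
        # ...but another manager, unaware of the delay, books Alex for new work.
        Booking("Alex", "Product launch", "Manager B", date(2024, 5, 13), date(2024, 5, 24)),
        Booking("Sam", "Product launch", "Manager B", date(2024, 5, 13), date(2024, 5, 24)),
    ]
    for a, b in find_conflicts(shared_schedule):
        print(f"Conflict for {a.resource}: '{a.task}' ({a.manager}) overlaps '{b.task}' ({b.manager})")
```

The point is that the check only works when every manager’s bookings live in one shared schedule that the team member can update as soon as a delay happens. Comparing every pair keeps the sketch simple; for large schedules, sorting each resource’s bookings by start date would be the more efficient choice.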

Ganttic: Flexible + User Friendly

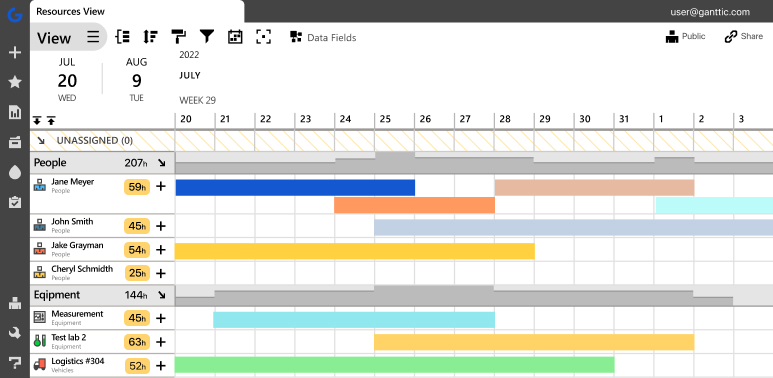

Our users often describe Ganttic as a resource management tool that’s easy to use. They say that with Ganttic, they can keep track of employees and equipment with ease, and that Ganttic is accessible, easy to update, and easy to understand.

Nevertheless, there are parts of Ganttic that are far from easy. Perhaps the most difficult part is getting started. It’s the darn flexibility that makes it so complicated. As if it weren’t enough that resource management is never done and always an ongoing process, it’s also different for each organization.

Our clients almost never add the same type of custom data to their resources, projects, and tasks, and only rarely do they take the same approach to the resource planning process. When software lets you fit your own data into it and use that data the way you prefer, getting started is, without a doubt, a tricky process.